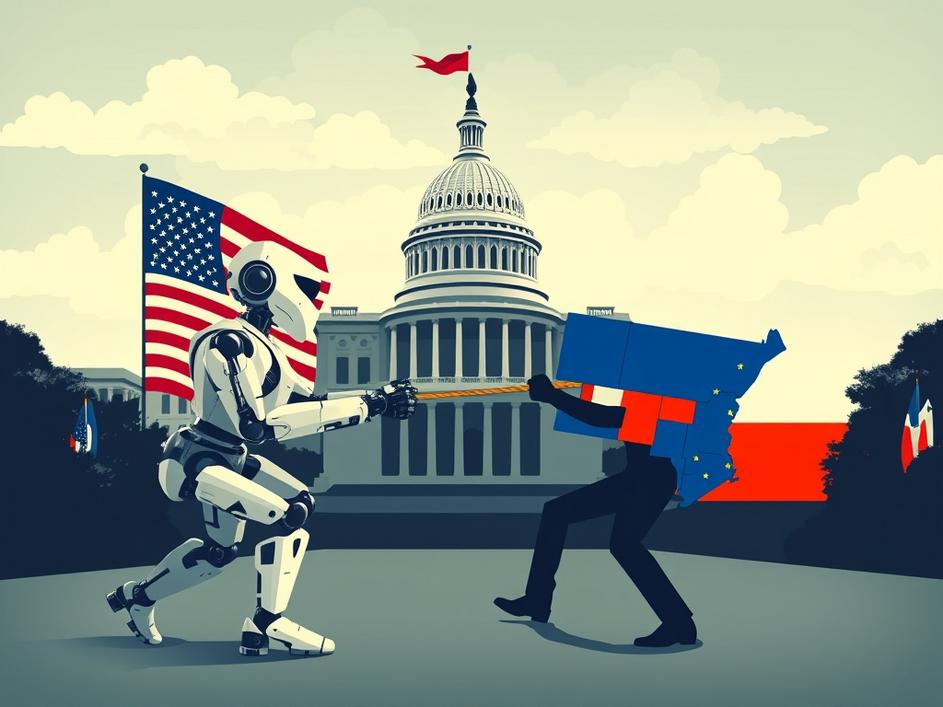

The latest news suggests that President Trump is again trying to stop states from making their own rules about artificial intelligence. A draft executive order is circulating, and if it becomes official, it could mean that only the federal government gets to decide how AI is regulated across the country. This push for federal control raises significant questions about who should be in charge of keeping AI safe and fair.

AI is rapidly changing many parts of our lives, from how we get healthcare to whether we get a loan. Because it’s so new and changes so quickly, there’s a lot of debate about how to keep it from causing harm. Some people think the federal government should set the rules to ensure everyone follows the same standards. Others believe that states should have the power to create their own rules that fit their specific needs and values. This executive order brings that argument to the forefront.

Those who support federal regulation of AI often say that it’s the best way to create consistent standards. They argue that a patchwork of state laws could create confusion and make it harder for companies to innovate. A single set of federal rules could make it easier for businesses to develop and deploy AI technologies across the country without having to comply with different rules in each state. They also argue that the federal government has the resources and expertise to understand and manage the complex issues that AI raises.

On the other hand, many argue that states should have the power to regulate AI because they can be more responsive to local needs and concerns. Different states may have different priorities and values, and state-level regulation allows them to tailor AI rules to fit their specific circumstances. For example, a state with a large agricultural sector might want to focus on regulating AI in farming, while a state with a big tech industry might be more concerned about AI in software development. State control also allows for more experimentation and innovation. States can try different approaches to AI regulation, and the successful ones can be adopted by other states or even at the federal level.

A big worry about stopping states from regulating AI is that it could create safety risks. Without strong rules, AI systems could be used in ways that harm people or discriminate against certain groups. For example, AI used in hiring could unfairly screen out qualified candidates, or AI used in criminal justice could perpetuate existing biases. If states can’t step in to address these issues, there’s a risk that these problems will go unchecked. There are real concerns that the rush to embrace AI technology could come at the expense of ethical considerations and public safety.

The core issue here is finding the right balance between encouraging innovation and protecting people from potential harm. Too much regulation could stifle the development of new AI technologies, while too little regulation could lead to serious consequences. The debate over federal vs. state control is really a debate about how to strike this balance. Federal control could provide more consistency and certainty, but it could also be less flexible and responsive to local needs. State control could allow for more innovation and customization, but it could also create confusion and inconsistency.

It’s important to look at this issue within the current political context. The push for federal control of AI regulation is likely driven by a desire to promote economic growth and maintain American competitiveness in the global AI race. Supporters of this approach may believe that a unified federal strategy is the best way to achieve these goals. However, critics argue that this approach could prioritize economic interests over ethical considerations and public safety. They may also be concerned about the potential for federal overreach and the erosion of state autonomy.

This executive order, if finalized, would likely set off a major debate about the future of AI regulation in the United States. It would likely face legal challenges from states that want to maintain their regulatory power, and it would spark a broader conversation about the role of government in shaping the development and deployment of AI. Opinions already differ about the right way forward, and working through those conflicting viewpoints will be a crucial step in ensuring that AI benefits everyone.

Ultimately, there’s no easy answer to the question of who should regulate AI. Both the federal government and the states have valid arguments for their respective roles. The challenge is to balance the need for consistency and innovation with the need for flexibility and local responsiveness. One possible solution is a collaborative approach in which the federal government sets broad guidelines and standards, while states keep the flexibility to adapt these rules to their specific circumstances. Whatever the outcome, decisions about AI should reflect an awareness of the technology’s vast influence and potential consequences.
