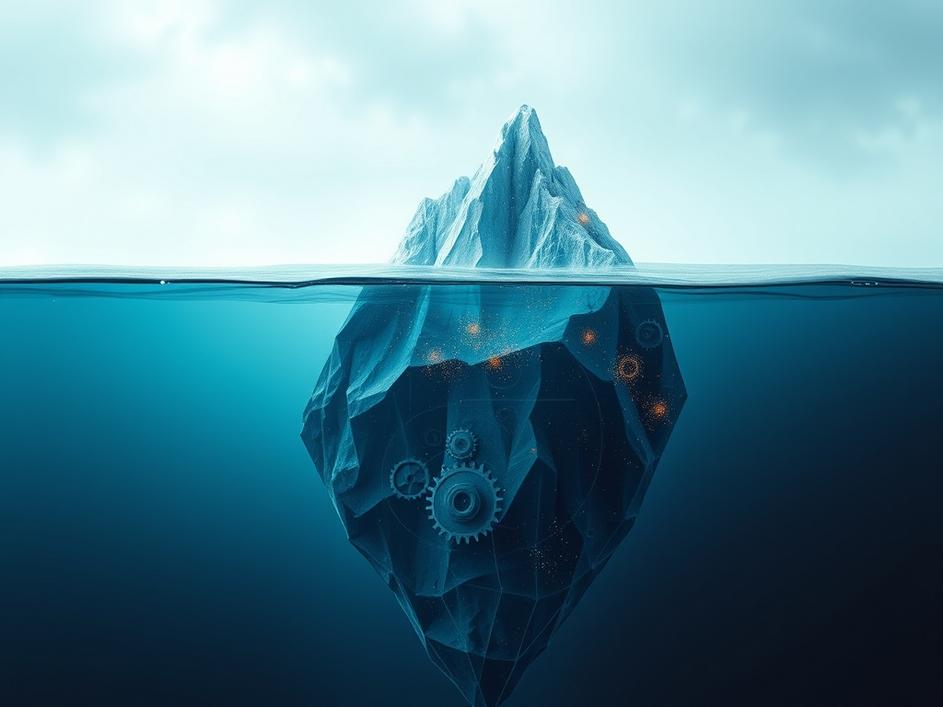

We all hear about AI changing things. But what about the quiet costs? Most people think about the fees for using AI, like sending it a prompt and getting an answer. That’s just the tip of the iceberg. There’s a whole lot of work happening behind the scenes that we don’t usually see. Imagine you ask an AI a tough question. It doesn’t just pull an answer from thin air. It processes, considers, and weighs options. And all that internal thinking? It costs money, in a way most of us haven’t really thought about before. This unseen cost is what’s starting to shake things up in the world of generative AI.

So, what exactly is this hidden cost? Think of it like this: when you ask an AI to create something, say a story or a complex image, it doesn’t just spit out the first idea that comes to mind. It often explores many different paths internally. It might try out a few sentence structures, check for logical consistency, or consider various artistic styles before giving you the final output. Each of these internal ‘checks’ or ‘considerations’ uses up computational resources. Developers are calling these internal steps ‘thinking tokens’. How they are charged varies: some hosted APIs count reasoning tokens and bill them like output tokens, while in other setups the effort never appears as a line item and is simply absorbed as compute cost. Either way, the term is a good way to describe the computational effort. The more ‘thought’ an AI puts into something, the more of these internal steps it takes, and the more costly the process becomes for the people running the AI. It’s like a person deeply pondering a tricky problem; their brain uses more energy than if they were just doing a simple task.

This concept of ‘thinking tokens’ isn’t just an interesting fact; it has a real financial impact. It means that the overall cost of running and developing advanced generative AI models is going up. If an AI needs to be more accurate, more creative, or more robust in its answers, it has to ‘think’ more. More thinking means more internal computations, and more computations mean higher costs for the AI companies. This isn’t just about paying more for the words you type in or get back. It’s about the infrastructure, the processing power, and the energy needed to support that deeper level of AI reasoning. This unseen inflation could mean that AI services become more expensive for us users, or it might slow down the development of new, highly capable AI because the research and development costs balloon. It’s a fundamental shift in the economic model of AI that many didn’t predict.
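To make the arithmetic concrete, here is a minimal sketch of how internal reasoning can inflate the cost of a single request. It assumes, purely for illustration, that reasoning is metered in tokens and priced at the output rate, as some hosted APIs do. The `Pricing` class, the `request_cost` function, and every price and token count below are hypothetical and not taken from any real provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical prices in dollars per one million tokens."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int,
                 thinking_tokens: int = 0) -> float:
    """Estimate the dollar cost of one request.

    Assumption: thinking tokens are billed at the same rate as visible
    output tokens. Real billing rules differ between providers.
    """
    billed_output = output_tokens + thinking_tokens
    total = (input_tokens * pricing.input_per_million
             + billed_output * pricing.output_per_million)
    return total / 1_000_000


# Made-up prices: $2 per million input tokens, $10 per million output tokens.
pricing = Pricing(input_per_million=2.0, output_per_million=10.0)

# Same prompt and same visible answer, with and without internal reasoning.
quick = request_cost(pricing, input_tokens=500, output_tokens=300)
deliberate = request_cost(pricing, input_tokens=500, output_tokens=300,
                          thinking_tokens=4000)

print(f"quick answer:      ${quick:.4f}")
print(f"deliberate answer: ${deliberate:.4f}")
```

Under these made-up numbers the visible answer is identical in both cases, yet the deliberate request costs roughly eleven times as much, because the 4,000 thinking tokens dwarf the 300 tokens the user actually sees.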

So, who really cares if AI models are getting more expensive to run internally? Well, everyone, eventually. For big tech companies, it means their margins might shrink, or they might have to pass those costs onto their customers. For smaller startups trying to build innovative AI tools, these rising ‘thinking costs’ could be a huge barrier. It might become too expensive to develop AI that performs complex, nuanced tasks, pushing them toward simpler, less ‘thoughtful’ solutions. This could lead to a future where only the biggest players can afford truly intelligent AI, limiting competition and innovation. It also raises questions about accessibility. Will cutting-edge AI become a luxury service, or will developers find new ways to make AI ‘think’ smarter, not just harder, to keep costs down? This challenge isn’t just about money; it’s about the future shape of the AI landscape and who gets to participate in it.

From my point of view, this ‘thinking token’ inflation is a crucial wake-up call. It highlights that raw processing power isn’t the only metric that matters for AI anymore. We need AI that can think smarter, not just more. Imagine a person who can solve a complex puzzle quickly because they see patterns others miss, instead of someone who tries every single combination. That’s the kind of efficiency AI developers need to strive for. This means building models that are better at focusing their internal ‘thought’ on the most relevant paths, pruning unproductive lines of inquiry, and learning from past reasoning efforts. It’s about developing more elegant algorithms and architectures that achieve deep understanding with fewer internal steps. This challenge will push AI research in exciting new directions, forcing innovators to think about efficiency and cost as deeply as they think about capability. It’s not just about making AI do more; it’s about making it do more with less wasted thought.

The reality is, there will always be a trade-off. Sometimes, a task demands extensive internal deliberation for accuracy, safety, or creativity. Think about AI designing a new drug or diagnosing a rare illness – you want it to ‘think’ deeply and consider every angle. For other tasks, like quickly generating a simple email draft, speed and lower cost might be more important than exhaustive internal processing. The trick for AI developers and users alike will be understanding this balance. We need to define what level of ‘thought’ is truly necessary for different applications. This means asking: How much internal processing is enough? How much is too much? And how can we measure and optimize this internal thinking to get the best results without breaking the bank? It’s a new layer of complexity in managing AI systems, but one that’s vital for sustainable growth.
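One way to picture this balancing act is a small lookup that assigns each kind of task a ceiling on internal reasoning and then caps it by what the caller can afford. This is only a sketch: the task names, the budget numbers, and the `choose_thinking_budget` function are all hypothetical, and a real system would tune them against measured quality and cost.

```python
# Hypothetical per-task ceilings on internal reasoning, in tokens.
# The numbers are illustrative, not recommendations.
THINKING_BUDGETS = {
    "email_draft": 0,         # speed and low cost matter most
    "code_review": 2_000,     # moderate deliberation
    "medical_triage": 16_000, # accuracy and safety justify deep thought
}

# Fallback for task types that are not listed above (also an assumption).
DEFAULT_BUDGET = 1_000


def choose_thinking_budget(task_type: str, max_affordable: int) -> int:
    """Return the reasoning budget for a task, capped by what we can afford."""
    wanted = THINKING_BUDGETS.get(task_type, DEFAULT_BUDGET)
    return min(wanted, max_affordable)


for task in ("email_draft", "code_review", "medical_triage", "unknown_task"):
    budget = choose_thinking_budget(task, max_affordable=8_000)
    print(f"{task}: up to {budget} thinking tokens")
```

The cap is the interesting part of the design: even a task that deserves deep deliberation gets trimmed when the affordable limit is lower, which is exactly the trade-off between thoroughness and cost described above.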

So, the next time you use a generative AI, remember there’s more to its cost than just the words it processes or the images it creates. There’s a whole invisible world of ‘thinking’ happening inside, and that internal effort has a real price tag. This concept of ‘thinking tokens’ makes us look at AI’s economics differently, pushing us to consider efficiency and intelligence in new ways. It means we’re moving beyond just building bigger, faster models to building smarter, more resource-aware ones. Understanding these hidden costs isn’t just for AI insiders; it’s key for anyone who wants to grasp the true implications of this powerful technology and help shape its future. It’s a reminder that even in the digital realm, nothing truly comes for free, especially deep thought.
