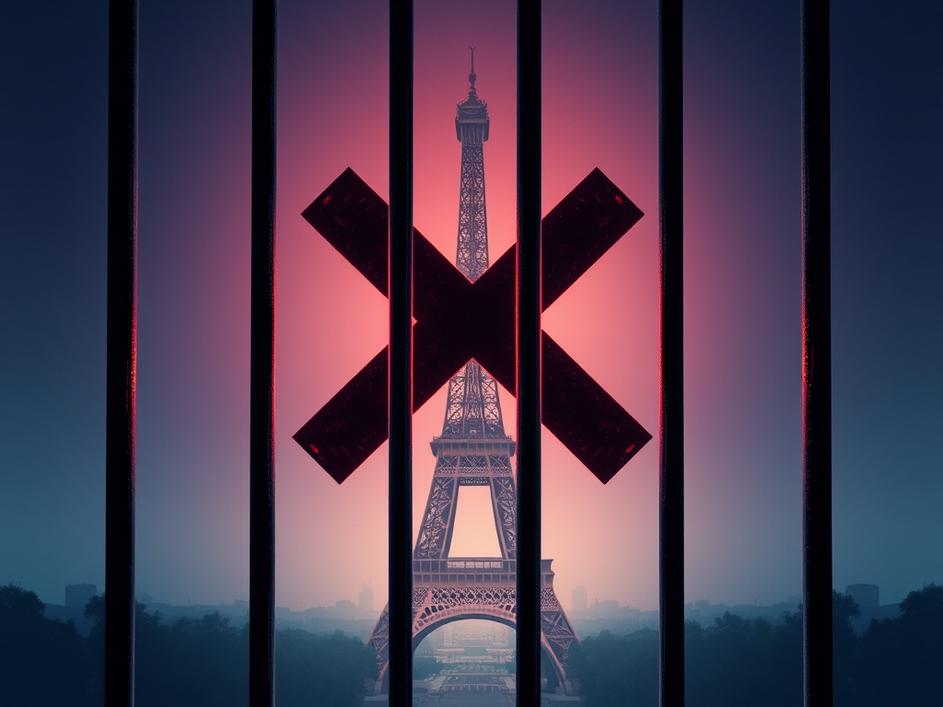

This week, the Paris prosecutor’s cybercrime unit conducted a raid on the offices of X, the social media platform formerly known as Twitter. This action signals a significant escalation in the scrutiny faced by the company, particularly concerning its handling of deepfakes and child safeguarding online. It raises important questions about the responsibilities of social media giants in policing their platforms.

The rise of deepfakes poses a serious threat to individuals and society as a whole. These AI-generated videos and audio recordings can convincingly mimic real people, making it difficult to distinguish between what is real and what is fabricated. The potential for misuse is vast, ranging from political manipulation to reputational damage. Social media platforms like X, with their massive reach, can become breeding grounds for the rapid dissemination of deepfakes, exacerbating the problem. Regulators are concerned about how X is addressing this challenge.

Child safeguarding is another paramount concern. Social media platforms must take proactive steps to prevent the exploitation and abuse of children online. This includes removing harmful content, identifying and reporting potential cases of abuse, and implementing measures to protect children from grooming and other online dangers. The Paris cybercrime unit’s investigation suggests that X may not be doing enough to meet these obligations, prompting a deeper look into its practices.

So far, X has not issued a detailed public statement regarding the raid. It remains to be seen how the company will respond to the concerns raised by the Paris prosecutor’s office. However, it is clear that X faces mounting pressure to demonstrate a commitment to addressing the issues of deepfakes and child safety. This could involve investing in new technologies to detect and remove harmful content, strengthening its reporting mechanisms, and collaborating with law enforcement agencies.

The raid on X’s offices is not an isolated incident. It reflects a growing global concern about the responsibilities of social media platforms in addressing harmful content and protecting users. Other companies, like Meta (Facebook) and TikTok, have faced similar scrutiny and regulatory pressure. It’s a challenge that all platforms must face head-on. The Paris raid underscores the need for a comprehensive approach that involves collaboration between governments, social media companies, and civil society organizations.

One of the key challenges is the lack of clear regulations and consistent enforcement regarding online content. Governments around the world are grappling with how to balance the need to protect free speech with the need to prevent the spread of harmful content. The European Union’s Digital Services Act (DSA) is one example of an attempt to address this challenge. But more needs to be done to clarify the rules of the road and ensure that social media platforms are held accountable for their actions.

AI can be a double-edged sword in this context. On the one hand, it can be used to create deepfakes and spread misinformation. On the other hand, it can also be used to detect and remove harmful content. Social media platforms are increasingly relying on AI-powered tools to moderate content, but these tools are not perfect. They can err in both directions, removing legitimate content or failing to detect harmful content. How to make AI moderation systems more accurate and fair remains a matter of active debate. The ethics of using AI in content moderation also deserve attention, because any such system must respect freedom of speech.

While social media platforms have a crucial role to play, users also have a responsibility to be critical consumers of information and to report harmful content when they see it. Media literacy education is essential to help people distinguish between real and fake content and to avoid falling victim to misinformation campaigns. Educating users to discern truth from fiction is just as vital as the measures taken by regulatory bodies and the platforms themselves.

The Paris cybercrime unit’s raid on X’s offices serves as a wake-up call for the entire social media industry. It highlights the urgent need for greater accountability and action to address the challenges of deepfakes and child safeguarding online. Meeting those challenges requires collaborative efforts from governments, technology companies, and the public to create a safer and more responsible online environment. Only then can we hope to mitigate the risks and harness the benefits of social media platforms.
